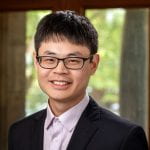

Speaker: Dr. Charles McEnally – Yale University

Date: Sep 13, 2024; Time: 2:30 PM; Location: PWEB 175

Abstract: The transition from fossil fuels to sustainable fuels offers a unique opportunity to select new fuel compositions that not only reduce net carbon dioxide emissions but also improve combustor performance and reduce emissions of other pollutants. A particularly valuable goal is finding fuels that reduce soot emissions. These emissions cause significant global warming, especially in aviation, since soot particles act as nucleation sites for contrails. Furthermore, soot contributes to ambient fine particulate matter, which is responsible for millions of deaths worldwide each year. Fortunately, soot formation rates depend sensitively on the molecular structure of the fuel, so fuel composition provides a strong lever for reducing emissions. Sooting tendencies measured in laboratory-scale flames provide a scientific basis for selecting fuels that maximize this benefit. We have developed new techniques that expand the range of compounds that can be tested by reducing the required sample volume and increasing the dynamic range. This has many benefits, but it is particularly essential for developing structure-property relationships with machine learning algorithms: the accuracy and predictive ability of these relationships depend strongly on the number of compounds in the training set and on how well that set covers the relevant structural features.

Biographical Sketch: Charles received a Ph.D. in Mechanical Engineering from the University of California at Berkeley in 1994, where he studied with Catherine Koshland and the late Robert Sawyer. Since then, he has been in the Chemical Engineering Department at Yale University, where he works with Professor Lisa Pfefferle. His research focuses on the combustion of sustainable fuels.